Wrap-up

Congratulations to Teammate's Sixth Birthday Implementation for being the first to discover that all they need to succeed is to have TEAMMATES IN THE GAME OF LIFE! They completed the hunt in just over 27 hours, at 9:17:43 pm EDT on Saturday, October 24th.

We would like to thank all the partygoers who attended our very first event! In total, 414 teams solved at least 1 puzzle, 268 teams finished Three-in-a-Row, 198 teams unlocked the Playmate, and 61 teams finished the puzzlehunt. We would also like to give shout-outs to the following teams:

- ✅✅✅haha answer checker go brrr❄️❄️❄️ for getting the first solve on Three-in-a-Row

- Teammate's Sixth Birthday Implementation for the first solve on Party Time

- Eggplant Parms for getting the first solve on Alone? Play Along

- Juicy karaage number 1! Wow! ~♪♪ for the first solve on Cardboard

Also, congratulations to The One and Only for finishing with the fewest incorrect guesses (44), The Mystery Machine for finishing with just 39 solves, and the 8 teams who finished hintless. The last team to finish was Sleep Deprivation, solving the metameta 10 minutes before the hunt officially ended.

Theme & Story

Matt & Emma’s Birthday Bash was themed around a children’s birthday party in celebration of 6 years since the inception of our team (and our personas, Matt Amee and Emma Tate). Teams were invited to join in on party festivities (i.e. puzzles), eat cake, and search for a mysterious present from Matt and Emma’s grandparents.

Upon discovering the TOY CHEST, our guests were transported into a fantastical world consisting of three islands inspired by toys (Matropolis), tabletop games (Tabletop Island), and video games (Pixel Paradise). By exploring these three islands, Matt, Emma, and the guests solved puzzles associated with various toy and game characters. Along the way, they also found and unlocked games for their Ninteamdo Playmate. Winning these games earned the players badges associated with puzzles in the world.

Ultimately, the guests made their way to a castle on each of the three islands. After solving the metapuzzle at each castle, they unlocked the final puzzle on their Playmate, which made use of all the badges and all the puzzles in the world. Upon solving this metameta, they discovered that all of the friends they met along the way were TEAMMATES IN THE GAME OF LIFE.

Hunt Structure

Rounds

Matt and Emma’s Birthday Bash had two parts: a Birthday round, followed by three Island rounds in the Toy Chest world that ran in parallel. Each round consisted of 8 regular puzzles and one metapuzzle. The Toy Chest rounds culminated in a metameta that used the answers to their 24 regular puzzles.

In parallel to the Toy Chest rounds, teams were able to play games on the Ninteamdo Playmate. This was designed to act as a series of interactive breaks that encouraged teamwork.

Unlocks

The unlock structure for the Birthday round was straightforward. Teams started with 3 puzzles, and unlocked 1 or 2 new puzzles per solve.

The unlock structure for the Toy Chest rounds was more complex, since teams unlocked puzzles in three rounds simultaneously.

Teams started with 2 unlocked puzzles in each round. As teams solved puzzles, more puzzles would unlock according to all of the following constraints:

- After 6 solves in a round, the meta would unlock.

- After 5 solves in a round, the three remaining puzzles in the round would unlock.

- Every round would have at least 2 unsolved puzzles unlocked (until there were no more left to unlock).

- Variable unlocks: these unlocked a new puzzle in a round chosen by the following priorities, in order:
  - Fewest puzzles unlocked
  - Most puzzles solved
  - Tabletop Island, then Pixel Paradise, then Matropolis

A variable unlock was granted at 4 combined Toy Chest solves. For every 2 solves beyond the 6 necessary to unlock a meta, one more variable unlock was granted. This could lead to a maximum of 3 variable unlocks.
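Because these constraints interact, a small simulation helps show how width evolves. The following is a minimal Python sketch, not our production code. It assumes the fixed constraints are re-applied after every solve and that the final tiebreak order is Tabletop Island, Pixel Paradise, Matropolis. Since the variable-unlock schedule can be read more than one way, it also assumes variable unlocks arrive at 4, 8, and 10 combined Toy Chest solves (the `VARIABLE_THRESHOLDS` constant). All names are hypothetical.

```python
from dataclasses import dataclass

ROUND_SIZE = 8   # regular puzzles per island
START_OPEN = 2   # unlocked puzzles per island at the start
MIN_OPEN = 2     # unsolved puzzles kept open per island
OPEN_ALL_AT = 5  # solves in an island that unlock the rest of it
META_AT = 6      # solves in an island that unlock its meta
# Final tiebreak for variable unlocks.
ISLAND_ORDER = ["Tabletop Island", "Pixel Paradise", "Matropolis"]
# ASSUMPTION: combined Toy Chest solve counts that grant a variable unlock
# (one at 4, then one per 2 solves beyond 6, capped at 3 in total).
VARIABLE_THRESHOLDS = (4, 8, 10)


@dataclass
class Island:
    name: str
    unlocked: int = START_OPEN
    solved: int = 0

    @property
    def meta_unlocked(self) -> bool:
        return self.solved >= META_AT


class ToyChest:
    def __init__(self) -> None:
        self.islands = {name: Island(name) for name in ISLAND_ORDER}
        self.variable_granted = 0

    def width(self) -> int:
        """Number of unlocked but unsolved regular puzzles."""
        return sum(i.unlocked - i.solved for i in self.islands.values())

    def solve(self, name: str) -> None:
        island = self.islands[name]
        if island.solved >= island.unlocked:
            raise ValueError(f"{name} has no open puzzle to solve")
        island.solved += 1
        self._apply_fixed_rules()
        total = sum(i.solved for i in self.islands.values())
        # Grant every variable unlock whose threshold has now been reached.
        while (self.variable_granted < len(VARIABLE_THRESHOLDS)
               and total >= VARIABLE_THRESHOLDS[self.variable_granted]):
            self.variable_granted += 1
            self._variable_unlock()

    def _apply_fixed_rules(self) -> None:
        for island in self.islands.values():
            if island.solved >= OPEN_ALL_AT:
                island.unlocked = ROUND_SIZE  # the remaining puzzles unlock
            # Keep at least MIN_OPEN unsolved puzzles open while any remain.
            floor = min(ROUND_SIZE, island.solved + MIN_OPEN)
            island.unlocked = max(island.unlocked, floor)

    def _variable_unlock(self) -> None:
        candidates = [i for i in self.islands.values() if i.unlocked < ROUND_SIZE]
        if not candidates:
            return
        # Priorities: fewest unlocked, then most solved, then island order.
        target = min(candidates, key=lambda i: (
            i.unlocked, -i.solved, ISLAND_ORDER.index(i.name)))
        target.unlocked += 1
```

Under this reading, four solves in Tabletop Island trigger the first variable unlock. It goes to Pixel Paradise, which ties Matropolis on fewest unlocked and most solved but comes earlier in the tiebreak order, so the width rises from 6 to 7.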

This format produced a smooth progression in which the puzzle width (the number of unlocked but unsolved puzzles) was easy to tune. Teams started with a width of 6 and usually progressed to a width of 7-8.

The Playmate and first game were unlocked after 4 solved puzzles in the Toy Chest, with each successive game unlocked after 2 more puzzle solves. For the leaderboard, completions of individual games counted towards solves.
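As a quick check of that schedule, here is a small Python helper. It takes a count of Toy Chest puzzle solves (the text does not say whether meta solves count, so the helper simply takes a number) and caps the result at the Playmate's 8 games. The function and constant names are hypothetical.

```python
PLAYMATE_GAMES = 8     # total number of Playmate games
FIRST_GAME_AT = 4      # Toy Chest solves that unlock the Playmate and first game
SOLVES_PER_GAME = 2    # additional solves needed for each later game


def games_unlocked(toy_chest_solves: int) -> int:
    """Number of Playmate games available after the given number of solves."""
    if toy_chest_solves < FIRST_GAME_AT:
        return 0  # the Playmate itself is still locked
    extra = (toy_chest_solves - FIRST_GAME_AT) // SOLVES_PER_GAME
    return min(PLAYMATE_GAMES, 1 + extra)


print([games_unlocked(n) for n in range(0, 20, 2)])
# [0, 0, 1, 2, 3, 4, 5, 6, 7, 8]
```

Under this reading, all 8 games are available after 18 Toy Chest solves.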

In earlier iterations, we started with a system based on 2015 MIT Mystery Hunt’s DEEP and 2018 GPH cookies, where unlock points were tracked for each round and puzzles would contribute points to each round. However, in practice, point thresholds resulted in “bursty” unlocks where solved puzzles would often unlock either no new puzzles or 2 or more puzzles.

Why?

Motivation

During MIT Mystery Hunt 2020, we received a phone call telling us that we were doing well in the hunt, and asking if we were ready to potentially write next year’s Mystery Hunt. Even though we didn’t end up winning, we had the sobering realization that a large fraction of our team didn’t have much puzzle-writing experience, and we were rather ill-prepared to write next year’s Mystery Hunt if we did win.

We decided afterwards that we would run our own online puzzlehunt this year to gain some experience and decide if we would want to run a Mystery Hunt in the future. Furthermore, since much of the teammate Mystery Hunt team only gathered together once a year, working on a longer-term project together helped us get to know each other better outside the context of the single weekend of Mystery Hunt!

Philosophy

Difficulty & Size

Our original plan was to create a hunt easier than Galactic Puzzle Hunt. After an initial survey of interested teammates, we agreed to write a hunt of roughly 20-30 puzzles. However, as we continued to develop the hunt, the scope increased to a 24-puzzle Toy Chest round and a shorter, 8-puzzle Birthday round. As we created metas and expanded the Playmate concept, this number continued to go up.

We decided that the increasing size of our puzzlehunt was alright because there were several other hunts that filled the niche of small to mid-sized online hunts (Puzzle Potluck 3, REDDOT hunt, several high-school run hunts, and DP hunt). This also contributed to our decision to allow more difficult puzzles. However, the scope creep did push back the start date of the hunt a few times (from “summer” to “late summer” to “October”).

Experience

Several of us who were interested in writing did not have prior experience writing or running a puzzlehunt. We also wanted this hunt to be a place for these authors to get puzzle writing experience. To support this, the meta and metameta structures were designed to have flexible answer constraints that could easily be imposed on top of puzzles authors were excited about. Furthermore, before we had decided on our metas, we ran an internal “potluck” for authors to share drafts of ideas, get early feedback, and receive priority for answer selection. Some puzzles (Pictionary, 20/20 Vision, Island Metric, and Union of Intersections) were further edited and included in Teammate Hunt. In the end, 8 of our 21 authors published their first puzzles in this hunt!

Remote Solving

Due to COVID, we prioritized the remote solving experience. Notably, we avoided physical puzzles, such as those requiring a printer or scissors, since not everyone would have simultaneous access to the same printed version. Similarly, we focused on making the puzzles look good on the site and on improving the copy-to-clipboard feature, and did not spend much time checking printer-friendliness.

Organization

We had a fairly flat organizational structure, consisting of puzzle authors and a small group of editors (whose responsibilities involved editing puzzles, organizing testsolves, and other administrative miscellany). Selection of editors was done by self-nomination, and most of our editors had prior puzzle-writing/editing experience, having previously written for GPH and/or PuzzlehuntCMU.

We had weekly meetings with average attendance of around 15 people. At most meetings, we would discuss hunt-wide details such as format, theme/story, and hints, as well as puzzle status updates, before breaking up into multiple testsolving and writing groups. For the first few months, most of the work was done at these meetings, but as the deadline approached, we started to spend more time outside of these meetings to get things done; it was not uncommon for us to have testsolves scheduled over the weekend. A month before the hunt started (2 weeks before the first full-hunt testsolve), a sizeable portion of hunt staff took a week off work to work full-time on tasks ranging from constructing some of the last puzzles (Now I Know My 🐝🐝🐝s, Alchemystery Walkthrough v0.01, Drip Quote) to postprodding completed puzzles, writing solutions, and a ton of Playmate and web development work (see below).

We used a fork of GPH’s Puzzlord as a tool for organizing puzzles, testsolves, and miscellaneous tasks like postprodding and factchecking, Google Drive to store puzzle drafts, and Discord for voice and text communication.

Writing

Theme Selection

Theme selection occurred in mid-February, after several proposals were made. The winning proposal was “Toys and Games”, a variant which eventually morphed into the Birthday Bash theme you’re familiar with. Some teams asked us to share what else we considered, but we won’t be divulging any rejected proposals in the event that we want to revive some of them in the future :)

Overall, we were primarily focused on issues like puzzle balance and logistics, and as a result less effort was put into developing the story. Instead, we tried to keep things simple. Rather than introducing elaborate plot twists, we strongly incorporated the general theming of a toys round, a tabletop round, and a video games round into our artwork, Playmate, and meta designs.

An interesting idea for a potential future hunt would be to appoint a “theme head” (with appropriate responsibilities), but this is something that did not come up as a top priority for us as first-time hunt runners.

Metapuzzles

Next, we spent the month of March coming up with ideas for all metapuzzles (including Three-in-a-Row and Badge Collection). In total, we had around 7 or 8 proposals for metametas, some of which were cut and/or turned into regular puzzles (see The Seven Empires). Given the number of first-time puzzle authors, we wanted our metas to have a decent amount of freedom in answer choices. This was a heavy influence in the design of Three-in-a-Row, as well as Party Time, the latter of which was written after two earlier meta proposals imposed strict constraints on feeder answers (an early version of Alone? Play Along, and the meta version of The Seven Empires). This strategy worked out reasonably well for us from a logistical point of view; we were able to assign thematic answers to puzzles at a much higher rate due to the flexibility granted to us. However, for the final puzzles, constraints imposed by Badge Collection severely limited the possible answers.

The decision to use the 24 normal puzzles as feeders into Badge Collection (as opposed to the 3 metas, as per convention in similarly structured hunts) was influenced by a similar argument: we wanted our meta writers to have essentially free rein over the format of their proposed metapuzzles. To keep flexibility in our yet-to-be-finalized story, the only constraint we imposed on the metas was that the answer had to be a reasonable command or action. This also allowed us to parallelize meta writing.

However, the decision to enforce multiple loose answer constraints was not without cost. During writing and initial testsolves, the meta authors intended some answers to be easy break-in points, such as TREANT PROTECTOR (PLAY TRUANT/9 letters) for Alone? Play Along. One by one, the original proposed answers in both Alone? Play Along and Party Time were replaced with answers that better fit puzzle themes or metameta constraints. This left these metas much harder than initially intended when we ran full-hunt testsolves with the new answers. This was affectionately referred to in internal discussions as the "Rotting Ship of Theseus" problem, as the new answers felt a lot more unwieldy and forced than the counterparts they replaced.

Puzzles

All metas were finalized in April and puzzle writing (or answer assignment to existing drafts) began. Each puzzle idea was assigned two editors, whose responsibilities included vetting the initial ideas, assigning answers from metapuzzles, and making suggestions for revisions. Furthermore, each puzzle was given a codename so that we could refer to it in a consistent and spoiler-free manner (especially since puzzle titles often changed across testsolves).

All editors were spoiled on every metapuzzle, and as a result were able to assign answers (and in some cases, a set of constraints) to puzzle authors without spoiling them on the metas. This allowed us to keep some authors unspoiled on the meta so that their final versions could be testsolved cleanly.

Many of our puzzles were ideated without an answer in mind (and had one assigned after the idea passed the editor check). This was partially due to the structure of Puzzlord, and partially because we did not want to place restrictions on first-time puzzle authors’ ideas. Despite this, there were a few puzzles that were created with an assigned answer as an inspiration, and on average they turned out really well (Physics Test is a great example). Now knowing this, we might be comfortable experimenting with fixed answer lists in the future!

Playmate

We really loved the concept of Teamwork Time (MH 2020) and puzzles like Race for the Galaxy (GPH 2019). As a tribute to our team name, we designed the Ninteamdo Playmate with the end goal of bringing teammates together for a fun break between puzzles. We hoped this would emulate the cooperative feeling of “interactives” typical to physical hunts in a completely virtual, online environment.

The original plan for the Playmate was a single interactive “runaround” comprising multiple minigames, around the length of Barbie’s Murder Party, placed at the very end of the hunt before Badge Collection. As brainstorming went on and our ideas grew, we decided to split them up across the hunt’s main round so that more teams could experience them earlier. As a result, the project ballooned in scope and difficulty into the 8 games we have today.

In designing, we tried to choose a mix of different game types: some put players under a lot of time pressure, like Ducky Crossing and Barbie’s Murder Party at House on the Hill, while others emphasized more puzzly aspects, like Kyoryuful Boyfriend and Mutant Meeples on Ice. We took inspiration from a variety of source material; as you may have noticed, each game combined a distinct toy, card or board game, and video game, sometimes in novel and unexpected ways. Each game was intended to be easier (and hopefully more fun) with more teammates, but still solvable alone (albeit with considerable difficulty).

This project was ambitious in scope and complexity (for gory tech details, see the Playmate section under Technical Details and HuntOps below), and took several months to design and implement. While many of the games could be polished further, we’re happy with the results and hope that teams enjoyed this atypical puzzlehunt experience!

Testsolving

Throughout the course of puzzle development, we aimed to testsolve each puzzle cleanly twice, and most weeks of meetings were spent on individual puzzle testsolving. Each testsolve provided feedback that improved the puzzle for the next iteration, as well as a datapoint for puzzle difficulty. We were able to reach the two-testsolve goal for most puzzles before the full-hunt testsolves.

We scheduled 2 full-hunt testsolves, the first occurring 3 weeks before the start of the hunt and the second occurring a week later. This turned out to be a great idea, because it forced us to finalize (and post-prod) as many puzzles as possible, and left us sufficient time to fix any last minute issues that popped up.

Our first testsolve team consisted of partially spoiled puzzle authors and low-commitment testsolvers. Due to the unavailability of many in the group throughout the weekend, we treated this as a good yardstick for our average team. Originally scheduled to take a full weekend, the testsolve ended up bleeding into the Thursday of the following week. As a result of this testsolve, we made several changes to individual puzzles, puzzle unlock order, as well as the puzzle unlock system.

The second testsolve team was made up of people who committed most or all of the weekend for the testsolve. They finished in slightly over 48 hours. We made several more changes to the metas by renaming Alone? Play Along and by adding an intermediate clue phrase in Party Time.

While smaller hunts might have been able to get away with local, individual testsolves, our hunt would have suffered from length/unlock structure issues had we not conducted these full-hunt testsolves. We would like to express gratitude to all our testsolvers, each of whom gave up at least a full weekend to help us smooth out the kinks in our hunt.

Technical Details and HuntOps

Website

The code for the website was forked from Galactic Trendsetters' open-source gph-site, which was written in Python and Django. To make use of interactive JavaScript (especially for the Playmate games), we decided to use React instead of Django templates for the frontend, porting all the front-end code to NextJS and React. Much of the core Django logic was reused, but each endpoint was changed to respond with JSON consumed by React components instead of directly rendered HTML.

One consequence of using React was rewriting the authentication and unlock system. Every request to the server (whether tied to a puzzle, image, or sound asset) was forwarded to a proxied endpoint in Django that checked whether that puzzle or asset was unlocked. We also had to carefully guard puzzle content in front-end code for puzzles that unlocked content over time like Functional Analysis, SpaceCells, and Puzzle Not Found.
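The core of such a proxy check is mapping a request path back to the puzzle that owns it. Below is a framework-free Python sketch, not the actual gph-site or Django code. The `/puzzles/<slug>/...` URL layout, the `authorize` function, and the choice of 404 over 403 (so that a locked puzzle is indistinguishable from a nonexistent one) are all assumptions for illustration.

```python
import posixpath
import re

# HYPOTHETICAL layout: every puzzle page and asset lives under /puzzles/<slug>/.
PUZZLE_PATH = re.compile(r"/puzzles/(?P<slug>[a-z0-9-]+)(?:/.*)?")


def authorize(path: str, unlocked_slugs: set[str]) -> int:
    """Return the HTTP status the proxy should send for an (already URL-decoded) path."""
    # Normalize first so paths like /puzzles/a/../b/x or //puzzles/b/x can't dodge the check.
    normalized = "/" + posixpath.normpath(path).lstrip("/")
    match = PUZZLE_PATH.fullmatch(normalized)
    if match is None:
        return 200  # not puzzle content; treated as public in this sketch
    # 404 rather than 403, so a locked puzzle looks the same as a missing one.
    return 200 if match.group("slug") in unlocked_slugs else 404
```

A real deployment would also need to decide what counts as public outside `/puzzles/`; this sketch simply allows it.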

An issue we discovered the day our site went public was leaked URLs. The NextJS library precomputes a “manifest” of all URLs at build time, which speeds up navigation but also includes the URLs of every puzzle and many of the hidden links used in Puzzle Not Found and the Playmate. To get around this, we manually built an allowlist of public URLs and filtered out the other compiled URLs using a horrendously ugly regular expression.
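The important property is that this is an allowlist rather than a denylist: anything not explicitly public is dropped, so a newly added hidden page stays hidden by default. Here is a minimal Python sketch. It assumes the manifest has already been parsed into a dict mapping each route to its assets; the real NextJS manifest is a generated JavaScript file, and parsing it is omitted. We used a regular expression over the compiled output instead, and the function names here are hypothetical.

```python
def filter_manifest(pages: dict[str, list[str]], public_routes: set[str]) -> dict[str, list[str]]:
    """Keep only allowlisted routes; everything else is dropped by default."""
    return {route: assets for route, assets in pages.items() if route in public_routes}


def leaked_routes(pages: dict[str, list[str]], public_routes: set[str]) -> list[str]:
    """Routes that would have been exposed without the allowlist, for manual review."""
    return sorted(set(pages) - public_routes)
```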

Playmate

The Ninteamdo Playmate was one of our most ambitious infrastructure projects. We decided to use Django Channels, an event-based library built on top of websockets and Redis, rather than relying on some of the bigger Node.js libraries, so that we could better control team allocation and unlock structure. In the backend, we spun up a threaded GameEngine for every game and every team, each running on a loop at a base rate of 10 fps. Each client (one websocket connection per browser tab) spun up a consumer that sent events to and received events from its team’s GameEngine. React served as our frontend, handling the websocket connections and rendering data to an HTML canvas on a loop.
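A per-team engine like this is essentially a fixed-timestep loop. Here is a minimal Python sketch using only the standard library: consumers push events onto a queue, and the engine drains the queue and advances one frame per tick. The class shape, the queue-based inbox, and the `state` dict are assumptions; the real engine talked to its consumers through Channels rather than an in-process queue.

```python
import queue
import threading
import time


class GameEngine(threading.Thread):
    """HYPOTHETICAL per-team game loop: drain client events, then advance one frame."""

    def __init__(self, fps: float = 10.0) -> None:
        super().__init__(daemon=True)
        self.interval = 1.0 / fps
        self.inbox: queue.Queue = queue.Queue()  # events pushed by websocket consumers
        self.state = {"frame": 0, "moves": []}
        self._halt = threading.Event()

    def run(self) -> None:
        next_tick = time.monotonic()
        while not self._halt.is_set():
            self.step()
            next_tick += self.interval
            # Sleep until the next frame, waking early if stop() is called.
            self._halt.wait(max(0.0, next_tick - time.monotonic()))

    def step(self) -> None:
        while True:
            try:
                event = self.inbox.get_nowait()
            except queue.Empty:
                break
            self.state["moves"].append(event)
        self.state["frame"] += 1
        # A real engine would now broadcast self.state to the team's consumers.

    def stop(self) -> None:
        self._halt.set()
```

Scheduling against `next_tick`, rather than sleeping a fixed interval after each frame, keeps the average rate close to the target even when a frame takes a while to compute.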

Load-testing was a huge priority for us, as we needed to know whether we could support many simultaneous connections from different teams. We used Locust with some custom Python code to test hundreds of users simultaneously connecting to (and “playing”) Ducky Crossing. Through trial and error, we settled on a pool of 50 worker processes on an 8-core machine with 32 GB of RAM. Choosing 50 processes was less about load and more about safety: if a game engine crashed, it would disconnect every team using it, so using more game engines isolated errors better.
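The write-up does not say how teams were mapped onto the worker pool. One plausible approach, sketched here in Python purely as an assumption, is a stable hash of the team ID so that every tab from a team lands on the same worker. Python's built-in `hash()` is randomized per process for strings, so separate workers would disagree on it; a digest from `hashlib` is stable. The `worker_for` name is hypothetical.

```python
import hashlib

NUM_WORKERS = 50  # pool size described above


def worker_for(team_id: str, num_workers: int = NUM_WORKERS) -> int:
    """Deterministically map a team to a worker index in [0, num_workers)."""
    digest = hashlib.sha256(team_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_workers
```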

One issue we identified two weeks before the hunt was a leak in database connections. For some reason, each new GameEngine thread opened a connection to Postgres but failed to close it. Despite our best efforts, we never isolated the cause, and ended up implementing a workaround that used connection pooling (via PgBouncer), an expiry time of 12 hours, and a cron job to monitor the number of open connections. Ironically, our frequent deploys the first few days of the hunt (which conveniently restarted the connections) meant this was never an issue. Our budgeted target of hundreds of simultaneous games heavily overshot our true load of 10 to 30 simultaneous games, but we're still glad we figured out the DB connection leak ahead of time, since we were regularly over the default limit.
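For a monitoring cron job, the number of open connections can be read from PostgreSQL's `pg_stat_activity` view. The following Python sketch is an assumption about how such a check might look, not our actual script. It shells out to `psql`, which must be installed and configured with credentials, and compares the count against PostgreSQL's default `max_connections` of 100. The `backend_type` filter requires PostgreSQL 10 or later, and the function names are hypothetical.

```python
import subprocess

# Count client connections only (the backend_type column needs PostgreSQL 10+).
COUNT_SQL = "SELECT count(*) FROM pg_stat_activity WHERE backend_type = 'client backend';"
MAX_CONNECTIONS = 100  # PostgreSQL's default max_connections; match your server config


def open_connections() -> int:
    """Ask Postgres for the current connection count (requires psql and credentials)."""
    result = subprocess.run(
        ["psql", "-tAc", COUNT_SQL],  # -t: tuples only, -A: unaligned, -c: run command
        capture_output=True, text=True, check=True,
    )
    return int(result.stdout.strip())


def connection_status(count: int, limit: int = MAX_CONNECTIONS, warn_ratio: float = 0.8) -> str:
    """Classify a connection count as "ok", "warn", or "critical"."""
    if count >= limit:
        return "critical"
    if count >= warn_ratio * limit:
        return "warn"
    return "ok"
```

A cron entry would call `connection_status(open_connections())` and alert on anything other than `"ok"`.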

On the topic of deploys: because we used a single Docker container for each server, every deploy (for errata fixes, etc.) required a restart of the game server, killing all in-progress games. To mitigate this, we set up a downtime alert system and scheduled deploys for 3am PT or later. Unfortunately, the popularity of the games (and the replay value from leaderboards) meant that there were teams constantly playing at every hour of the day. We apologize to any of the teams whose Mutant Meeples on Ice or Mahjong Madness high scores got interrupted. If we run anything remotely similar again, we will definitely split up the servers so that they can be deployed separately.

Finally, we would like to extend a huge thank-you to the developers at Kogan for their extremely helpful blog post. As far as we’re aware, this is the only resource on the web that describes developing multiplayer games through Channels; most other existing projects are either smaller in scope or aimed at chat applications.

Copy to Clipboard

In past hunts, we have found that transcribing data to sheets has sometimes been a barrier to starting new puzzles. In our planning discussions, we proposed early on that we wanted to have a copy-to-clipboard button to make transcribing data easier and faster, especially for puzzles with grids or tables.

The main functionality used JavaScript to save an HTML element or lines of text to the clipboard. Directly passing HTML to the clipboard worked in most cases, but sometimes we wrote separate code for the HTML that would be viewed and the HTML that would be copied. Unfortunately, the week before the hunt, we discovered a plethora of issues with Firefox and Google Sheets that required less-than-ideal hacks to work across browsers. Despite these issues, we’re glad that many teams found this helpful.
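The browser half (calling the Clipboard API with both a plain-text and an HTML version) is JavaScript and is not shown here. The Python sketch below only illustrates the two payloads for a grid: tab-separated text, which spreadsheets typically split into cells on paste, and an escaped HTML table. The function names are hypothetical, and neither function addresses the Firefox or Google Sheets quirks mentioned above.

```python
import html


def to_tsv(rows: list[list[str]]) -> str:
    """Plain-text payload: one line per row, cells separated by tabs."""
    def clean(cell: str) -> str:
        # A stray tab or newline inside a cell would split it on paste.
        return cell.replace("\t", " ").replace("\n", " ")
    return "\n".join("\t".join(clean(cell) for cell in row) for row in rows)


def to_html_table(rows: list[list[str]]) -> str:
    """HTML payload: a bare <table>, with cell text escaped."""
    body = "".join(
        "<tr>" + "".join(f"<td>{html.escape(cell)}</td>" for cell in row) + "</tr>"
        for row in rows
    )
    return f"<table>{body}</table>"
```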

We’ve released a standalone example, and hope others can avoid some of our pitfalls.

Hints

We used the hint infrastructure that is built into gph-site. This system enabled us to claim hints via either Discord or an internal page and draft responses. The draft page also displayed the most recently awarded hints for that puzzle, to ensure consistency (and allow for easy reuse of hints). In addition, the internal page displayed an internal hint-answering leaderboard and the average response time, intended to motivate us to reduce hint response time and claim more hints.

For consistency, we had a document of pre-written hint responses for possible questions, which was further updated during the hunt as puzzle authors discussed hint requests that the document did not cover. During the hunt, when we discovered that a prepared hint was not effective, we replaced it with a clearer and more helpful one.

Teammate Hunt was run by approximately 25 teammates. We had Discord bots that relayed hint requests and emails to us, letting us quickly see, coordinate, and respond to them. Thanks to our varied sleep schedules and being spread across the Eastern and Pacific time zones, we were able to staff hints and emails basically 24/7 during the hunt. While we don’t think this should be an expectation for teams running hunts other than Mystery Hunt, it is certainly nice if your hunt staff is large enough.

We used a shared Gmail inbox to reply to individual emails and SendGrid for sending mass emails. Our initial plan was to manually create emails for hunt-wide announcements, sending them in batches of 80 BCC’ed email addresses to reduce the risk of getting caught by spam filters. This got tedious, and despite our best efforts, Gmail placed a 1-hour timeout on our account when we sent our first hunt-wide announcement. We ended up building internal tools to automate drafting and sending announcements.
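Batching itself is simple. This Python sketch uses the standard library's `email.message.EmailMessage`. The batch size of 80 comes from the text, while the function names and the choice to address each message to ourselves, with teams in `Bcc`, are illustrative assumptions. When such a message is sent with `smtplib.SMTP.send_message`, the `Bcc` addresses are used for delivery but the header itself is not transmitted.

```python
from email.message import EmailMessage

BATCH_SIZE = 80  # addresses per BCC'ed message


def batches(addresses: list[str], size: int = BATCH_SIZE):
    """Yield consecutive slices of at most `size` addresses."""
    for start in range(0, len(addresses), size):
        yield addresses[start:start + size]


def build_announcement(sender: str, subject: str, body: str, bcc: list[str]) -> EmailMessage:
    """One announcement addressed to ourselves, with teams hidden in Bcc."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = sender
    msg["Bcc"] = ", ".join(bcc)
    msg["Subject"] = subject
    msg.set_content(body)
    return msg
```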

During the hunt, emails to non-Gmail addresses didn't work very well. We traced this to a combined issue between SendGrid and SpamHaus. At the lowest paid tier, SendGrid uses shared IPs, grouping several email sends to come out from a single server. Some prior PayPal scammers had used SendGrid to send phishing emails, and SpamHaus blocked the IP addresses used, blocking email sends from our legitimate account as well. We believe Gmail uses a custom spam filtering system that wasn't affected by this, but many other domains rely on SpamHaus and therefore weren't getting emails from us. We reached out to SendGrid support and they notified SpamHaus to get the IP addresses off the blocklist, but this didn't go through in time.

The recommended solution was to move to a Dedicated IP, but this would have been significantly more expensive (we paid $15/month and the cheapest tier for a dedicated IP was $90/month). All the email services we checked follow a similar system, so we don't think migrating off SendGrid would have fixed this.

Discord

We used Discord webhooks/bots to notify us of emails, answer submissions, hint requests, team photo submissions, and other logged information. This gave everyone easy access to all the streams of information and also gave real-time notifications for things like hints and email. More complex behaviors were done through discord.py.

Puzzle Not Found

Puzzle Not Found was an early puzzle idea that baked itself into our website's design. The inclusion of team photos was incorporated just for this puzzle, but we had a lot of fun watching teams upload jokes, memes, and photos of their pets. If we run another hunt, there's a good chance we'll bring them back. No comment on whether they'll be part of a puzzle or not.

The biggest worry we had for Puzzle Not Found was teams running denial-of-service attacks against our site when trying to get a Too Many Requests error. This luckily never came to pass, although we did see several attempts to send too many requests through other means, including rapidly refreshing the page, updating team members every second, submitting several team photos of spoiled apples, and using up all hints (which we refunded).

Additional Libraries

Because emojis render differently cross-browser, we used the Twemoji library, which replaces emojis with static images (as seen on Twitter or Discord). This ensured a consistent display, which was especially important for puzzles like Cardboard and Kyoryuful Boyfriend.

Inspired by the celebratory mood when solving in Mystery Hunt 2020, we used react-confetti to display confetti when puzzles were solved.

Daylight Saving Time

We ran our hunt across a Daylight Saving Time boundary in the US. Very few DST-related code changes were required, since the Python libraries we used handled it automatically, but we spent enough time verifying DST behavior that we'd rather not run another event where we have to think about it.
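Here is a small Python example of the kind of pitfall worth verifying. With the standard-library `zoneinfo` module (Python 3.9+, which needs time zone data available on the system), subtracting two aware datetimes that share the same `tzinfo` gives wall-clock time and ignores the UTC offsets. An interval spanning the 2020 US switch back to standard time on November 1 therefore looks an hour shorter than it really was, while converting to UTC first gives the true elapsed time. The timestamps are illustrative.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

EASTERN = ZoneInfo("America/New_York")

# Illustrative timestamps on either side of the November 1, 2020 change.
start = datetime(2020, 10, 31, 12, 0, tzinfo=EASTERN)  # EDT (UTC-4)
end = datetime(2020, 11, 1, 12, 0, tzinfo=EASTERN)     # EST (UTC-5)

# Same tzinfo object on both sides, so Python subtracts wall-clock times.
wall_clock = end - start
# Converting to UTC first accounts for the offset change.
elapsed = end.astimezone(timezone.utc) - start.astimezone(timezone.utc)

print(wall_clock, elapsed)  # 1 day, 0:00:00 1 day, 1:00:00
```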

Reflections

Hunt Scope

As mentioned above, the scope of the hunt expanded over the course of the year as we pushed back the intended start of the hunt. Given the size of our team, many of us needed to take days or weeks off to finish parts of the hunt as the deadline approached and we still lacked puzzles or Playmate games. To avoid this, we could have scoped down some puzzles or parts of the hunt. We could also have set aside more time (separate from weekly meetings) for content creation, or helped pair people who had ideas with people who had free time to write puzzles. Stricter deadlines might also help, but they are difficult to enforce without real consequences.

Puzzle Difficulty, Variance, and Ordering

We wanted the Birthday round puzzles to be easier than those in the Toy Chest round. Outside of this, however, we had no strong guidelines on puzzle difficulty. As more puzzles were written, we realized that the three islands had different difficulty profiles (based on testsolving):

- Matropolis had one outlier puzzle (⭐ BATTLE STAR ⭐) in difficulty, several medium-length but approachable puzzles, and a tough pure meta.

- Pixel Paradise puzzles were either long/difficult (SpaceCells, Read and Write, Yogurt from the Crypt) or on the easier end of the spectrum.

- Tabletop Island had several high-variance reference puzzles (Playing the Field, Love Stories, Powerful Metamorphers) and longer puzzles (The Seven Empires, Fire-Quackers), but was capped off by a relatively simple meta that enabled easy backsolving.

At this point, most of the puzzles and answers were set, so we could only make minor tweaks to individual puzzles and reordering within rounds. Since the metapuzzle unlocked after 6 solves, any ordering would work for Matropolis (especially since the meta was hard). For Pixel Paradise, we placed the longer puzzles in the middle so that more of them would be solved before unlocking the meta. For Tabletop Island, we tried to spread the reference puzzles across the round and put the less backsolvable puzzles near the end.

| What we were hoping for | What happened |
|---|---|
| Teams would pick different paths in the Toy Chest round and there would be no obviously easiest island | The first 4 meta solves were from 4 different teams and spanned all 3 metas. By the end, all three islands had similar finish rates. |
| Teams would not have all the difficult puzzles unlocked at the same time. | Many teams did hit a wall of stuck puzzles. |
| Distributing some of the longer puzzles to the beginning and middle would make them less skipped or backsolved. | In all three islands, the last two puzzles were some of the least solved puzzles even though they weren’t the hardest. On the other hand, more teams got to see and were able to forward solve some of the more complex puzzles. |
| The first teams to finish would do so in 1-2 days. | Teammate's Sixth Birthday Implementation finished in a little over 27 hours, and 5 teams finished within the first 48 hours. |
| Our overall difficulty would be somewhat easier than GPH but harder than Puzzle Potluck, REDDOThunt, and similar hunts. | We think we hit this goal pretty well. However, there aren’t many well-known hunts between these two difficulty levels, so unfortunately it was hard to give teams a more precise reference point beforehand. |

Hints

We were initially unsure how many hint requests we would get and how well-staffed we would be. We were also fairly confident that the top team would finish the hunt before Monday, and we wanted top teams to finish before hints were released, so we settled on Monday as the start of hint availability. Through the check-in emails, we found that many teams, some entirely new to puzzlehunts, were stuck on puzzles in the Birthday round and would effectively be stuck on the hunt for the rest of the weekend. This led to our decision to release hints for just the Birthday round on Saturday. During this period, our team also had enough availability to respond to follow-up hint requests via email. Likewise, based on feedback and on top teams finishing the hunt, we released hints for the entire event a day early (on Sunday). By that point, however, we were unable to support as many follow-up email requests.

We think this was quite successful. By Sunday 6pm EDT, we had responded to almost 300 hint requests, which allowed many teams to see parts of the Toy Chest round before the end of the first weekend.

We believed in focusing on hinting rather than releasing additional puzzles because we felt that time unlocks overwhelmed teams by creating too many available puzzles. The reception to this was mixed, with some feedback being decidedly pro timed-unlock, and we’ll definitely be discussing our approach to this in any potential future hunts.

For hint infrastructure, there were a few quality-of-life improvements we would have loved. First, our internal hint drafting page did not display earlier hints requested by the same team on the same puzzle, so we would sometimes either miss the context (and give a duplicate hint) or need to spend time finding it. We’d like to apologize to the teams who got less useful or duplicate hints. Second, we often asked teams to email back if a hint was confusing or needed clarification. Organizationally, it would have been easier for us if these responses were also kept within the hint system, since not everyone was logged into the email account, and email lacked other features, like displaying the most recent responses to a puzzle.

Stats

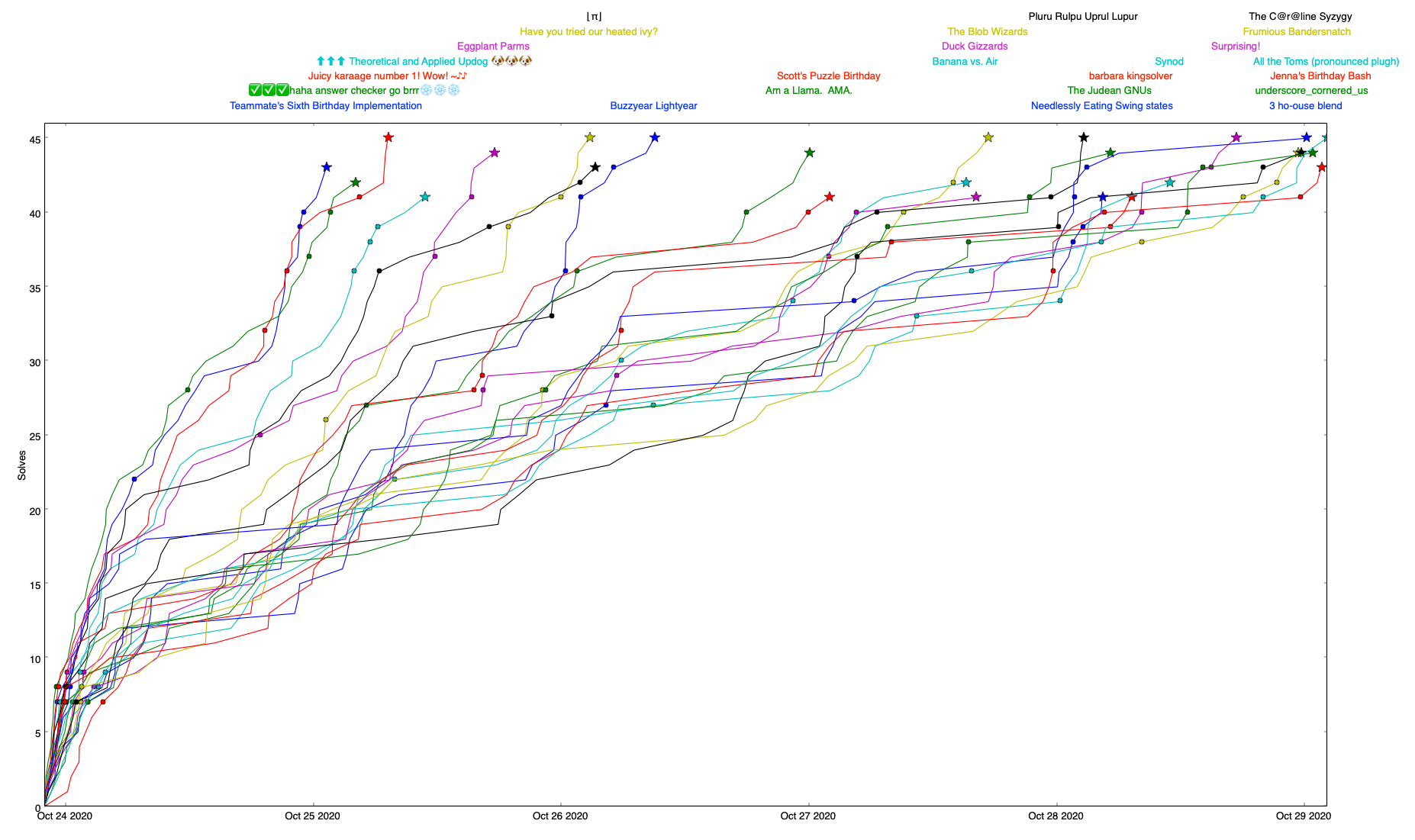

Solve progress of the first 25 teams. Circles are meta solves; stars are metameta solves.

Flourish visualization of solves over time. In this visualization, solving Badge Collection credits a team with 45 solves, even if they did not backsolve everything.

A full guess log is available, as well as a game log. Let us know if you do anything neat! (Note: the game log does not have all game end times, only the end time of the first win for each game.) We also have stats for individual puzzles. Finally, we are making our bigboard public, which lists information like placing, number of incorrect guesses, and number of hints used per puzzle.

Hints

We received 2601 hint requests in total, with an average response time of 4 minutes 45 seconds and a median response time of 3 minutes 30 seconds.

Functional Analysis

General stats:

- Total submissions: 192804 numbers

- Unique numbers: 19727

- Largest solution: a sequence of 344 7's by Have you tried our heated ivy?

- Smallest number never submitted: 9240

- Smallest number never submitted, excluding guesses by brute-forcers: 422

- 262 teams checked the value of 904.
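Both "smallest number never submitted" figures are the minimum excluded value (mex) of a set of submissions: the first non-negative integer that does not appear in it. Below is a minimal Python sketch for anyone poking at the public guess log. It assumes the log can be read as hypothetical `(team, number)` pairs and that only non-negative integers count. The function name and the `excluded_teams` parameter (for example, the brute-forcers listed in this section) are illustrative; this is not how the figures above were actually computed.

```python
from typing import FrozenSet, Iterable, Tuple


def smallest_never_submitted(
    guesses: Iterable[Tuple[str, int]],
    excluded_teams: FrozenSet[str] = frozenset(),
) -> int:
    """Return the smallest non-negative integer that no counted team submitted.

    Assumes each guess is a hypothetical (team_name, number) pair from the guess log.
    """
    # Collect every number submitted by teams we are not excluding.
    seen = {number for team, number in guesses if team not in excluded_teams}
    # Walk upward from 0 until we hit a gap; this terminates because `seen` is finite.
    candidate = 0
    while candidate in seen:
        candidate += 1
    return candidate


if __name__ == "__main__":
    log = [("A", 0), ("A", 1), ("B", 2), ("B", 4), ("C", 3)]
    print(smallest_never_submitted(log))                    # 5
    print(smallest_never_submitted(log, frozenset({"C"})))  # 3
```

Building a set first keeps each membership check O(1), so the scan costs time linear in the number of guesses plus the answer.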

Extremes:

- Juicy karaage number 1! Wow! ~♪♪ solved the puzzle querying only 65 unique numbers!

- The One and Only solved the puzzle querying only 70 unique numbers!

- Erotic Cake of r/picturegame tested 761 different numbers before solving the puzzle, likely without an automated script.

- Also, they guessed every number from 1 to 120, except 74.

- whoevers checked the value of (f0 0) 5523 times.

- Gundam Guardians checked the value of (f1 1) 58 times.

Brute-forcers:

- Sally brute-forced sets of 100 at a time, with a total of 1048 guesses.

- Traitor Boys brute-forced up to 1000, with a total of 1380 guesses.

- Pusheen Appreciation Society checked every number from 0 to 1000, plus a lot of permutations of 7, 8, 9 prepended with a 6, with a total of 2184 guesses.

- An Attempt Was Made brute-forced up to 1700, with a total of 2219 guesses.

- Assian Assinine Assassassins brute-forced up to 1000, plus a lot of permutations of 9 and 8, with a total of 2809 guesses.

- Cold Storage brute-forced up to 5000, with a total of 5141 guesses.

- Teams potato, 🎃, and whoevers all submitted every number from 0 to the answer.

SpaceCells

- 3157 solutions (unique submissions per team per assignment)

- 1742 assignments solved

| Assignment | Solutions | Solves | Fewest cycles by | Cycles | Fewest cells by | Cells | Fewest instructions by | Instructions |
|---|---|---|---|---|---|---|---|---|
| 1 (Inversion) | 702 | 202 | 61 teams | 16 | 27 teams | 1 | 25 teams | 3 |
| 2 (X Crossing) | 369 | 195 | 40 teams | 16 | 21 teams | 2 | 18 teams | 3 |
| 3 (Unity) | 331 | 182 | 47 teams | 24 | 4 teams | 1 | 23 teams | 3 |
| 4 (Memories) | 267 | 170 | The Wombats | 27 | 3 teams | 1 | Marshmallow Mateys | 8 |
| 5 (Power It Up) | 234 | 155 | 3 teams | 74 | The C@r@line Syzygy | 2 | 11 teams | 11 |
| 6 (Differences) | 202 | 145 | 5 teams | 24 | 6 teams | 1 | 3 teams | 3 |
| 7 (Storage Unit) | 163 | 133 | Magpie | 301 | 3 teams | 3 | 2 teams | 21 |
| 8 (Wheel of Fortune) | 198 | 134 | SPecial oPS | 78 | 7 teams | 2 | White Maria | 18 |
| 9 (Colors of the Rainbow) | 205 | 135 | 2 teams | 19 | 81 teams | 2 | Magpie | 16 |
| 10 (Median Filter) | 177 | 130 | 🍅 Hunt's Teammato Paste | 156 | 5 teams | 4 | 17 teams | 10 |
| 11 (Vending Machine) | 170 | 88 | Magpie | 127 | 2 teams | 2 | 2 teams | 35 |
| 12 (Quick Maths) | 139 | 73 | Newbies | 235 | Teammate's Sixth Birthday Implementation | 1 | Teammate's Sixth Birthday Implementation | 22 |

Puzzle Not Found

- 3899 clicks on "View Solution"

- 575 visits to Payment Required error page

- 502 views of the Too Many Requests comic

- 442 bad team photos

- 952 views of the I'm a Teapot! page

- 7 sales of Matt & Emma's Mate Tea (so far)

- 6 sales of bAAAAAAAAs (so far)

- 1 team found the 402 error before the hunt started

- 1 team found the 415 error before the hunt started

For more fun submissions related to Puzzle Not Found, see Appendix C.

Playmate

In total, over 11500 games were completed, and thousands more were started but never finished!

- 1336 games won

- 10558 games lost

| Game | Games won | Games lost | Most plays by | Plays | High score by | High score |
|---|---|---|---|---|---|---|
| Ducky Crossing | 439 | 2137 | Time Vultures | 143 | Time Vultures | 52.1s |
| Codemanes | 213 | 3209 | Time Vultures | 217 | underscore_cornered_us | Round 30 |
| Tateroids | 118 | 1242 | Sleep Deprivation | 53 | N/A | |
| Kyoryuful Boyfriend | 125 | 1860 | Les Gaulois | 56 | N/A | |
| Marineteam | 110 | 597 | The Surprise Birthday Infiltrators | 46 | N/A | |
| Mutant Meeples on Ice | 141 | 0 | Eggplant Parms | 13 | See below | |
| Mahjong Madness | 111 | 0 | Eggplant Parms | 10 | See below | |
| Barbie's Murder Party | 79 | 1513 | Sleep Deprivation | 228 | See below | |

High scores for Mutant Meeples on Ice:

| Level | Team(s) | Min moves |
|---|---|---|
| 1 | 20 teams | 8 |
| 2 | 11 teams | 12 |
| 3 | 10 teams | 10 |
| 4 | 2 teams | 21 |
| 5 | Am a Llama. AMA. | 56 |
| 6 | 2 teams | 31 |
| 7 | 11 teams | 17 |
| 8 (glitch%) | 5 teams | 14 |
| 8 (glitchless%) | Killer Chicken Bones | 126 |

High scores for Mahjong Madness:

| Players | Team | High score |
|---|---|---|
| 2 players | Ski Nautique | 72 |
| 3 players | Eggplant Parms | 100 |
| 4 players | Duck Gizzards | 25 |
| 5 players | Duck Gizzards | 21 |
| 6 players | Duck Gizzards | 22 |

High scores for Barbie's Murder Party at House on the Hill:

| Room | Team(s) | High score |
|---|---|---|
| Spirit of Algebra | 3 teams | 15 |
| Discombobulated Contraption | Eggplant Parms | 21 |
| Reanimated Hog | 2 teams | 12 |
| Experimental Horror | 2 teams | 16 |

Misc

There were 28 guesses of TEAMMATE across the entire hunt.

The Future of Teammate Hunt

We plan to keep the hunt available for the long term, with the exception of the Playmate. The Playmate is still up, in case you missed a game you wanted to try. However, after we migrate to a static website, the server backing the Playmate will go down, at which point the games will no longer be playable.

As for future Teammate Hunts, we're not sure what our plans are yet. If we do run another one, we'll be sure to let people know. There’s generally a lot of enthusiasm for future puzzling, but also a lot of exhaustion on the part of the team.

Credits

Puzzle Editors: Edgar Chen, Bryan Lee, Alex Pei, Brian Shimanuki, Liam Thomas, Ivan Wang, Patrick Xia

Playmate Mastermind: Ivan Wang

Puzzle Authors: Edgar Chen, Katie Dunn, Jacqui Fashimpaur, David Hashe, Alex Irpan, Bryan Lee, Cameron Montag, Tom Panenko, Nishant Pappireddi, Alex Pei, Joanna Sands, Margaret Sands, Brian Shimanuki, Steven Silverman, Liam Thomas, Ivan Wang, Rachel Wei, Catherine Wu, James Wu, Patrick Xia, Justin Yokota

Playmate Game Authors: Alex Irpan, Bryan Lee, Alex Pei, Joanna Sands, Margaret Sands, Liam Thomas, Ivan Wang, Rachel Wei, Patrick Xia, Justin Yokota

Artists: Jacqui Fashimpaur, Rachel Wei

Web and Infra: Zhan Xiong Chin, Alex Irpan, Ivan Wang, Nathan Wong

Additional Testsolvers: Herman Chau, Zhan Xiong Chin, Andrew He, Harrison Ho, Dominick Joo, Ashley Kim, Lara Seren, Jonathan Wang, Nathan Wong, Moor Xu

Additional thanks: Galactic Puzzle Hunt for open-sourcing their website and Puzzlord

Appendix

Appendix A: Q&A

Logistics / Organization Structure

When did you start planning and what was your general timeline for format outlining/puzzle writing/website design?

- January 19: Mystery Hunt ends, and we realize teammate has a decent shot at winning in the coming years but that our hunt-writing experience is varied. We decide it would be beneficial to try to write a smaller hunt before having to tackle a Mystery Hunt.

- February 5: We start weekly Discord meetings, though it’s slow at first.

- February 26: We settle on the theme of toys and games.

- March 11: Metameta proof-of-concept is done; we settle on having 3 rounds of 8 puzzles in the second part. Playmate game design is ongoing.

- March 25: Initial iteration of the Birthday meta is complete.

- March 28: We run an internal potluck of puzzles. Some of these (Pictionary, 20/20 Vision, Island Metric, and Union of Intersections) would end up in the hunt in some form (though all of them were rewritten).

- April 1: Initial iterations of the Tabletop and Pixel metas are complete.

- April 8: Initial iteration of Matropolis meta is complete. The story gets (somewhat) fleshed out.

- April+: Puzzles are being written.

- May 17: Prototype of first Playmate game is implemented.

- August 19: We launch our site.

- September 28-October 2: “cram week” of miscellaneous tasks: finishing remaining puzzles, story finalization, world and puzzle icon artwork, and creating the last Playmate games.

- October 2-8: First full-hunt testsolve. About half of the puzzles are implemented as web pages and half only exist as Google Docs.

- October 5: Last puzzle is written. We change the unlock structure from a points-based unlock to the current one.

- October 9-12: Second full-hunt testsolve.

- October 10: All puzzles are implemented as web pages.

- October 22: Almost all solutions are implemented as web pages; all puzzles are fact-checked.

- October 23: Hunt starts.

RW: I was awed and inspired by the amount of art in Mystery Hunt 2020’s Penny Park -- in addition to having lovely background art for each round, each puzzle had a charming, unique, and relevant icon. Strong theming was very important to our hunt, so I thought it would be nice to similarly have art for each round and puzzle as opposed to just presenting teams with lists of puzzles.

We decided to make all of the art for the intro round and initial website black-and-white lineart to match the XKCD art style that was so integral to the Birthday round meta. Then, for the main round, we took the opportunity to introduce color and fun thematic art styles -- a cartoon/storybook style for Matropolis, physical watercolors for Tabletop Island, and pixel art for Pixel Paradise. Because we ended up using the icons to match puzzles to tiles for the metameta, almost all the puzzle icons ended up being unrelated to the subject matter of the puzzles themselves. Nevertheless, the art team had a lot of fun drawing everything, and we’re happy that teams enjoyed all the art :)

The idea to add hats to all the puzzle icons to indicate solves came about in the last week, and the idea to add hats to team photos came about in the last few days, so I’m grateful that our web team was indulgent enough to make those additional touches of theming and personalization possible!

It seemed like some of the puzzles were completely standalone, while others required you to google extensively and find specific online resources to help you progress. Was this just different styles by different puzzle creators or was there a way we could know which situation we were in?

It was definitely a matter of different styles, but not strictly by author: some puzzle authors wrote both self-contained and reference-based puzzles.

With puzzles, you never know what will pop up; good puzzles hopefully make the transition feel natural when it does arise.

How many person-hours went into this thing?

One teammate tracked his teammate-related time, and it totaled 407 hours. Overall, we're guessing somewhere in the thousands. Several individuals spent hundreds of hours, some maybe even close to 1,000, so the total was probably on the order of 5,000-10,000 hours.

Advice / Recommendations

What advice do you have for people writing their own puzzles and puzzle rounds?

If you’re starting out, run a one-round hunt: 6-10 puzzles and a meta.

Testsolving is so important! You need to get people to testsolve your puzzles, because other people will always approach your puzzles and break them in ways you didn’t expect. It’s highly unlikely that your first draft of a puzzle will be perfect.

Fact-checking: the bare minimum is to make sure that the solution “checks out” and matches what’s in the puzzle (e.g. numbers, indexes, and facts are correct, and there are no typos). It’s also important to check for things that aren’t there: Is any clue ambiguous, with more than one plausible answer? Is there an alternate solve path that leads to a different answer? Is the original puzzle missing anything that should be there? Try to do this early, because it also gives you a feel for how solvers might search for your solution; it's easy to accidentally write a clue that's almost impossible to solve.

Here are some good posts about writing puzzles:

- Introduction to Writing Good Puzzle Hunt Puzzles by David Wilson

- A series of posts by Foggy Brume

- Suggestions for Running a Puzzlehunt by Rahul Sridhar

RW: If you didn’t get enough from Storytime, here are my two favorite authors & masters of the art of the short story:

- Jorge Luis Borges: “Tlön, Uqbar, Orbis Tertius”, “Pierre Menard, Author of the Quixote”. Seductive, intellectual, wildly inventive. Borges writes like he’s spilling secrets of the universe to a dear friend and confidant. Best read by candlelight in a comfy armchair surrounded by more books stacked high around you.

- Italo Calvino: Invisible Cities, Cosmicomics. Calvino weaves joy and beauty into everything he writes. He captures the childlike feeling of constant discovery and exploration of the new and the magical. Best read on a sunny day under blue skies and fluffy clouds.

I find that both authors engage with the spirit of puzzling, but if you want something more directly related, try Perec or Queneau; here’s a delightful essay from Perec on the art of the crossword.

PX: I don’t have any book recommendations for puzzle-writing (or puzzles in general). The best lessons are learned through experience and mistakes, and doing more puzzles.

Do you have any recommended websites/software for collaborative solving?

Within our team, most people use Discord for voice/text communication and Google Sheets for collaboration that doesn’t require much formatting. For Word Wide Web, we had testsolvers who used both Jamboard and Figma.

Also, don’t underestimate the power of image editing software and screen sharing. For some puzzles (The Seven Empires, ⭐ BATTLE STAR ⭐, Connect the Dots, Badge Collection), it’s enough for one person to be doing the image editing.

Puzzles

Most difficult puzzles to construct?

- Physics Test went through something like 6 iterations before we settled on what we have. The final puzzle is basically unrecognizable from the original; about the only things the two have in common are “science” and “pokemon.”

- SpaceCells took about 200 hours of work.

- Yogurt from the Crypt took at least 50 hours just for a first draft.

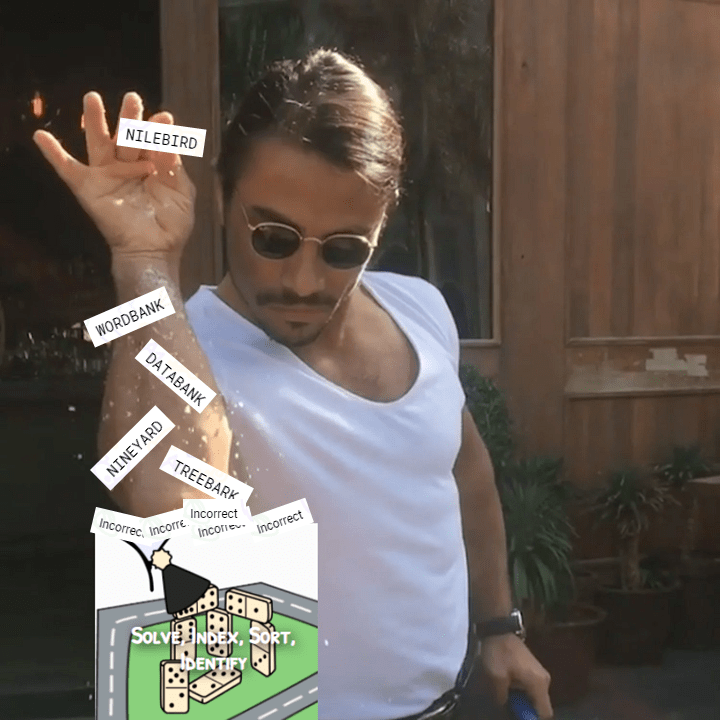

- It wasn't hard, but there were 5 drafts of Solve, Index, Sort, Identify, each with a different list of words, so I redid the work 5 times. By the end, I had written scripts to brute-force all the constraints and auto-generate Nutrimatic queries for the word fill.

- None of our puzzles (or games) were perfect in the first iteration; even Exceptions to the Rule, Pictionary, and Icebreaker went through a couple iterations (Pictionary had to be completely redrawn twice!)

The meta was written before the answers were fixed. A few earlier puzzle concepts were rewritten (and in one case, completely redrawn) with an answer adapted to a set of directions (Pictionary, 20/20 Vision, Island Metric), while others were designed specifically around an answer (Exceptions to the Rule, Storytime, Blog).

How constraining was the meta meta on the rest of the hunt?

The metameta was at least partially responsible for our “Rotting Ship of Theseus” problem, and needing to constrain answers for two metas makes coming up with good answers much harder. It definitely made some of the answers for puzzles written later pretty weird.

I'm always interested in stories of how puzzles changed during testsolving.

Many of the authors' notes contain stories about how puzzles changed over time. In a lot of cases, steps that detracted from the puzzle or were too vague were removed or more clearly hinted.

Where do you think this hunt lies on the general puzzle landscape, and how do you feel about that?

We think this hunt is easier than GPH but is closer to it than to other hunts we are familiar with. There’s a wide gap here, and we’re glad to have been able to fill it.

How did you get the words in This Anagram Does Not Exist?

It helped to have multiple writers for these clues. SEPTIMORPHIC, the word that started it all, is in the wordlist from thisworddoesnotexist. The rest were gleaned from etymology dictionaries and scientific terms.

Were there any puzzles / ideas that got cut during the process of making the hunt? If so, why? And how were they supposed to work?

BL: There were several ideas that were cut (on Puzzlord, we had ~60 ideas, but only 37 of them made it into the hunt). We won’t be revealing any of them, since we might reuse them in the future.

PX: Most of the ideas that were cut did not make it through editor check, usually because the author had other puzzles in draft or because the idea overlapped in genre too much with existing ideas. Only two ideas were cut after answers were assigned, due to concerns about fun and constructability.

Favourite riddles or cryptic crossword clues?

- LT: Mosque🐝toes

- IW/BL: Georgia aurally takes in Phrygian dominant scale: we initially joked about using Phrygian as a vitamin (thematic word), then did a double-take when we found out it was actually a musical term.

- IW: Brew in good meal with flight of mango lassi: Not only is “lassi” a suspicious-looking break-in for the Icarus mechanic, but this clue uses three distinct definitions of flight: the act of flying (thematic word for Icarus), a selection of drinks (surface reading), and the act of fleeing (cryptic reading).

- BS: Like 4-down [MISO] and 69-down [ISRAEL]: ASIAN

Misc

Do I know any of you =)?

If you recognize any of us in the credits, feel free to poke us and say hi!

What are your favorite longest and shortest anagrams of MEAT?

During the interaction for Taskmaster in Mystery Hunt 2019, we were asked for the longest word we could come up with using letters in our team name, and we picked... METAMETA.

Favorite anagram is always divisive, but highest consensus has been on META followed by MEAT.

Why does Taylor Swift always appear when I least expect it?

You should start expecting Taylor Swift, then she’ll show up less.

How many Swifties are in Teammate?

This question we do abhor, because we Swifties number only four!

Is it TMH or TPH?

It’s Teammate Hunt ;)

Have you tried simulating a Turing Machine using SpaceCells?

SpaceCells would be Turing complete if you had an infinite grid. I hope the progression of assignments made it apparent that this is so.

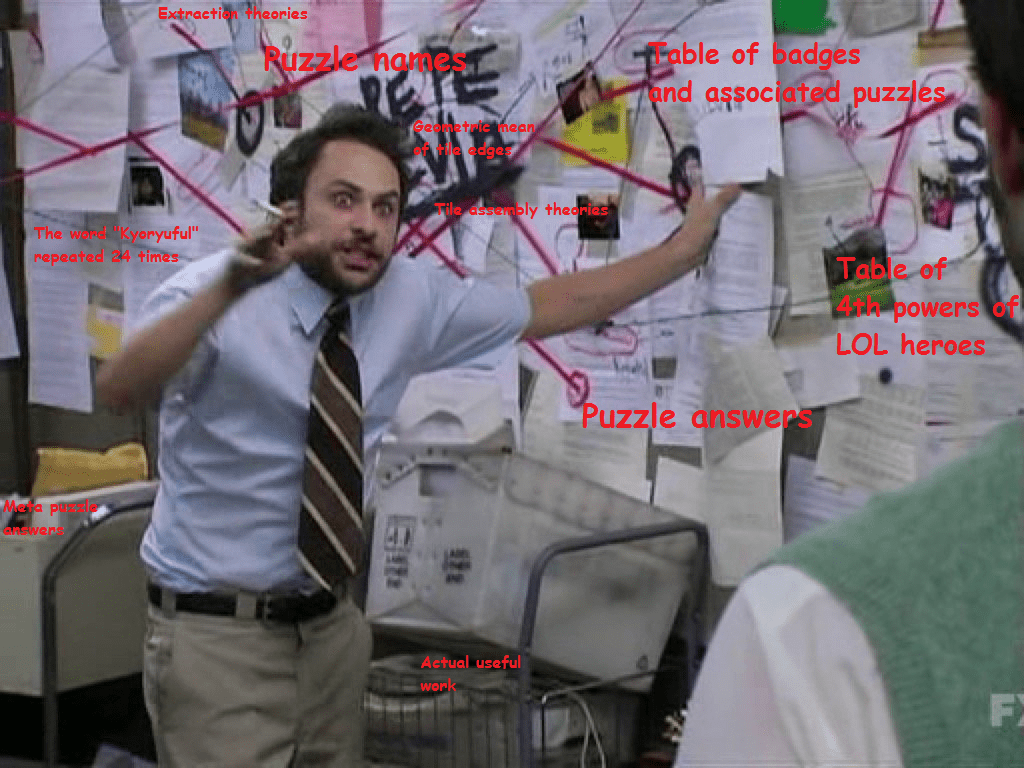

Appendix B: Memes

To take a break from solving Badge Collection, Jules-Auguste sent us a bunch of memes, which nerd-sniped us as soon as we realized what they had done. For more, see this drive.

Fanart of glitch% for Mutant Meeples on Ice

how-people-react-to-how-you-spend-your-free-time.png

TBH, this might be easier than using voice chat for larger groups...

Solve, Index, Sort, Identify was not that backsolvable, but that didn't stop teams from trying.

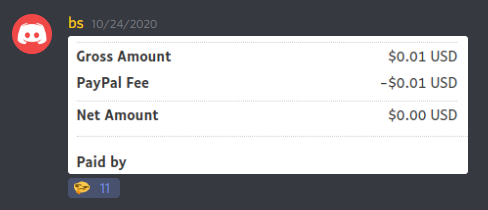

For context, we know this donation was from a team that had already found the Payment Required error.

We do wish we could have seen teams' reactions to the first game of Tateroids! In testsolving, our testsolvers were consistently like "I can't believe you've done this."

Extraction Line: During a testsolve of The Seven Empires, our testsolvers asked themselves (paraphrased):

“Do you think we need the double arrow line at the bottom?"

"No, it’s the extraction line, we won’t need it until the end."

"Is that a real thing?"

"It’s totally a real thing.”

The meme of a horizontal line somewhere in the puzzle being only useful for extraction came back in later puzzles like Yogurt from the Crypt, Powerful Metamorphers, and This Anagram Does Not Exist.

Appendix C: Guesses

Puzzle Not Found

Puzzle Not Found gave teams a lot of leeway for joke submissions.

Media References

- “Achy Breaky Heart” (Duck Soup)

- “All Star” (COmet aTTACK)

- Bee Movie script (17th Shard, [URGENT])

- Moby Dick, first paragraph (Jules-Auguste)

- Rickroll (Jules-Auguste, Teammate's Sixth Birthday Implementation, Time Vultures, The Puzzledome)

- Our FAQ (Esoteric Coteries of /r/PictureGame, [URGENT])

- “We Didn't Start the Fire” (Totally Sus Birthday Invitation)

What we think are responses to the Bad Apple!! photo

- PLAYTOUHOUSCRUBS (🅱️uzzle Idiots)

- ANIMEWASAMISTAKE (Puzzled Pandas) (editor's note: Touhou is not an anime!)

- Various names of Touhou characters (Every Puzzle is 🍰)

Jokes

- 17th Shard:
  - KNOCKKNOCK
  - WHOSTHERE
  - WHO
  - WHOWHO
  - IMSORRYIDONTSPEAKOWL

Advertisements

- IWISHIHADAPODCASTTOPLUG (Dank Poets Society)

- BIDENFORPRESIDENT (Jules-Auguste)

- cowpeabara:
  - INTEGIRLSPUZZLEHUNT
  - FORANYONEWHOIDENTIFIESASFEMALEORNONBINARY
  - STARTSONNOVEMBERTH
  - THEPUZZLESWILLBEGOOD
  - ENOUGH
  - IFYOUIDENTIFYASFEMALEORNONBINARY
  - YOUSHOULDSIGNUP
  - WWWINTEGIRLSORGPUZZLE
  - THETHEMEISSCIENCECONVENTION
  - SINCEISTILLHAVEACOUPLEOFSUBMISSIONSLEFT
  - THANKYOU
  - BRIANANDPATRICK
  - FORTESTSOLVINGOURHUNT
  - SORRYBRYAN

Some of the more desperate pleas:

- MYTEAMMATESAREGOINGTOKILLMEIFTHISISNTTHERIGHTTHINGTODO (⌊π⌋)

- PUTUSINWRAPUPPLEASE (Keep Puzzling)

Non-answer checker attempts:

- natto sent us a single hint request containing 5449 requests of the form ‘I request a _____’.

Kyoryuful Boyfriend

We were curious to see which of the following accepted answers would be most popular.

Act IV - Kawaii Handmaid (oωo✿)

- DELAWARE: 27 guesses (16.77%)

- HAWAII: 134 guesses (83.23%)

Act V.i - Prince Woodsworth the Fourth

- JOHANNESKEPLER: 61 guesses (29.90%)

- GALILEOGALILEI: 139 guesses (68.14%)

- GALILEOGALLILEI: 1 guess (0.49%)

- GALILEOGALILEE: 3 guesses (1.47%)

Act V.iii - The Countess

- FAMILY: 112 guesses (88.89%)

- ILYFAM: 6 guesses (4.76%)

- ILOVEYOUFAM: 8 guesses (6.35%)
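Each percentage above is one answer's guess count divided by the total number of guesses for that act, rounded to two decimal places. As a quick check, here is a small Python sketch (the function name is our own, hypothetical choice) that reproduces the Act IV split from the raw counts:

```python
from typing import Dict


def guess_shares(counts: Dict[str, int]) -> Dict[str, float]:
    """Return each answer's share of all guesses, as a percentage rounded to 2 places."""
    total = sum(counts.values())
    return {answer: round(100 * n / total, 2) for answer, n in counts.items()}


if __name__ == "__main__":
    # Act IV counts from the list above: 27 + 134 = 161 guesses in total.
    print(guess_shares({"DELAWARE": 27, "HAWAII": 134}))
    # {'DELAWARE': 16.77, 'HAWAII': 83.23}
```

The same call with the Act V.i or Act V.iii counts reproduces those splits as well.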

Appendix D: Hypercube Gallery

Teams visualized the final hypercube in several different ways. We've compiled a hypercube gallery here.

Appendix E: Recipe Book

Thanks to all of the teams who sent in their lovely potion recipes, teammate has been able to compile an Alchemystery! Recipe Book to share with you all. Cheers!

Appendix F: Love Story Submissions

Thanks to all the teams who shared clips reenacting scenes from a Taylor Swift music video. We’ve compiled a few of our favorites here!

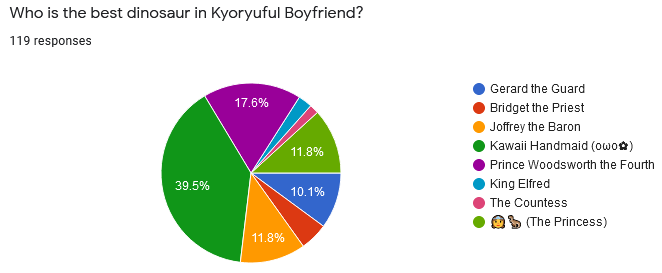

Appendix G: Who is Best Dino?

It was a hard fought battle, but the Kawaii Handmaid (oωo✿) took the win with a commanding 39.5% plurality of the vote. Prince Woodsworth the Fourth was 2nd, while Joffrey the Baron and 👸🦖 (The Princess) tied for 3rd.